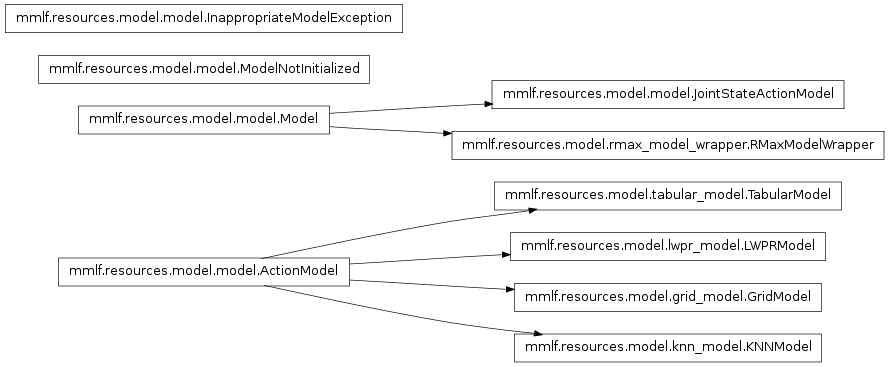

Models¶

Interfaces for MMLF models

This module contains the Model class that specifies the interface for models in the MMLF. The following methods must be implemented by each model class:

- addExperience

- sampleStateAction

- sampleSuccessorState

- getSuccessorDistribution

- getExpectedReward

- getExplorationValue

The standard way of implementing a model is to learn a model for each action separately. In order to simplify this task, the Model interface contains a standard implementation in the case that an ActionModelClass parameter is passed to constructor. This parameters must be a class that implements the interface ActionModel that is also contained in this module. If the ActionModelClass parameter is passed to the Model constructor, for each action one instance of it is constructed an all methods are per default forwarded to the respective method of the ActionModel.

- class resources.model.model.Model(agent, userDirObj, stateSpace, actions, ActionModelClass=None, cyclicDimensions=False, **kwargs)¶

Interface for MMLF models

This class specifies the interface for models in the MMLF. The following methods must be implemented by each model:

- addExperience

- getSample

- sampleSuccessorState

- getSuccessorDistribution (optional)

- getExpectedReward

- getExplorationValue (optional)

- Additional, this class already implements the following methods:

- addStartState

- addTerminalState

- drawStartState

- getNearestNeighbor

- getNearestNeighbors

- isTerminalState

- plot

The class contains a standard implementation in the case that an ActionModelClass parameter is passed to constructor. This parameters must be a class that implements the interface ActionModel that is also contained in this module. If the ActionModelClass parameter is passed to the Model constructor, for each action one instance of it is constructed an all methods are per default forwarded to the respective method of the ActionModel.

- The constructor expects the following parameters:

agent : The agent that is using this model

userDirObj : The userDirObj object of the agent

- actions : A sequence of all allowed actions

(note: discrete action space assumed)

- ActionModelClass : A class implementing the ActionModel interface

If specified, for each available action a separate instance is created and all method calls are forwarded to the respective instance

- cyclicDimensions If some (or all) of the dimensions of the state space

are cyclic (i.e. the value 1.0 and 0.0 are equivalent) this parameter should be set to True. Defaults to False.

- addExperience(state, action, succState, reward)¶

Updates the model based on the given experience tuple

Update the model based on the given experience tuple consisting of a state, an action taken in this state, the resulting successor state succState and the obtained reward.

- addStartState(state)¶

Add the given state to the set of start states

- addTerminalState(state)¶

Add the given state to the set of terminal states

- static create(modelSpec, agent, stateSpace, actionSpace, userDirObj)¶

Factory method that creates model-learners based on spec-dictionary.

- drawStartState()¶

Returns a random start state

- getConfidence(state, action)¶

Return how confident the model is in its prediction for the given state action pair

- getExpectedReward(state, action)¶

Returns expected reward when action is performed in state

- getExplorationValue(state, action)¶

Returns how often the pair state-action has been explored

- static getModelDict()¶

Returns dict that contains a mapping from model name to model class.

- getNearestNeighbor(state)¶

Returns the most similar known state to the given state

- getNearestNeighbors(state, k, b)¶

Determines k most similar states to the given state

Determines k most similar states to the given state. Returns an iterator over (weight, neighbor), where weight is the guassian weigthed influence of the neighbor onto state. The weight is computed via exp(-dist/b**2)/sum_over_neighbors(exp(-dist_1/b**2)). Note that the weights sum to 1.

- getPredecessorDistribution(state, action)¶

Returns an iterator that yields predecessor state probabilities

Return an iterator that yields the pairs of state along with their probabilities of being the predecessor state of state when action is performed by the agent. Note: This assumes a discrete (or discretized) state space since

otherwise this there will be infinitely many states with probability > 0.

- getSample()¶

Return a sample drawn randomly

Return a random sample (i.e. a state, action, reward, successor state 4-tuple).

- getStates()¶

Return all states that are contained in the example set or are terminal

- getSuccessorDistribution(state, action)¶

Returns an iterator that yields successor state probabilities

Return an iterator that yields the pairs of state along with their probabilities of being the successor state of state when action is performed by the agent. Note: This assummes a discrete (or discretized) state space since

otherwise this there will be infinitely many states with probability > 0.

- isTerminalState(state)¶

Returns an estimate of whether the given state is a terminal one

- samplePredecessorState(state, action)¶

Return sample predecessor state of state-action.

Return a state drawn randomly from the predecessor distribution of state-action

- sampleSuccessorState(state, action)¶

Return sample successor state of state-action.

Return a state drawn randomly from the successor distribution of state-action

- class resources.model.model.JointStateActionModel(agent, actionRange, actionSpace, ActionModelClass, **kwargs)¶

Interface for models for continuous action spaces.

The JointStateActionModel subclasses Model and changes its default behaviour: Instead of forwarding every action to a separate ActionModel, state and action are concatenated and one ActionModel is used to learn the behaviour within this “State-Action-Space”, i.e. a mapping from (state, action_1) -> (succState, action_2). Since action_2 depends on the policy, it cannot be learned by a model and is thus ignored. For training of the ActionModel, action_2 is set to action_1.

NOTE: Currently, only one action dimension is supported!

The constructor expects two parameter: * actionRange : A tuple indicating the minimal and maximal value of the

action space dimension.- ActionModelClass : A class implementing the ActionModel interface. This

class is used to learn the State-Action Space dynamics.

- addExperience(state, action, succState, reward)¶

Updates the model based on the given experience tuple

Update the model based on the given experience tuple consisting of a state, an action taken in this state, the resulting successor state succState and the obtained reward.

- getConfidence(state, action)¶

Return how confident the model is in its prediction for the given state action pair

- getExpectedReward(state, action)¶

Returns expected reward when action is performed in state

- getExplorationValue(state, action)¶

Returns how often the pair state-action has been explored

- getPredecessorDistribution(state, action)¶

Returns an iterator that yields predecessor state probabilities

Return an iterator that yields the pairs of state along with their probabilities of being the predecessor state of state when action is performed by the agent. Note: This assumes a discrete (or discretized) state space since

otherwise this there will be infinitely many states with probability > 0.

- getSample()¶

Return a sample drawn randomly

Return a random sample (i.e. a state, action, reward, successor state 4-tuple).

- getSuccessorDistribution(state, action)¶

Returns an iterator that yields successor state probabilities

Return an iterator that yields the pairs of state along with their probabilities of being the successor state of state when action is performed by the agent. Note: This assumes a discrete (or discretized) state space since

otherwise this there will be infinitely many states with probability > 0.

- samplePredecessorState(state, action)¶

Return sample predecessor state of state-action.

Return a state drawn randomly from the predecessor distribution of state-action

- sampleSuccessorState(state, action)¶

Return sample successor state of state-action.

Return a state drawn randomly from the successor distribution of state-action

- class resources.model.model.ActionModel(stateSpace, *args, **kwargs)¶

Interface for MMLF action models

- addExperience(state, succState, reward)¶

Updates the action model based on the given experience tupel

- getConfidence(state)¶

Return how confident the model is in its prediction for the given state

- getExpectedReward(state)¶

Return the expected reward for the given state under this action

- getExplorationValue(state)¶

Returns the exploration value for the given state

- getPredecessorDistribution(state)¶

Iterates over pairs of predecessor states and their probabilities

- getSuccessorDistribution(state)¶

Iterates over pairs of successor states and their probabilities

- samplePredecessorState(state)¶

Sample a predecessor state for this state for this action-model

- sampleState()¶

Sample a state randomly from this action model

- sampleSuccessorState(state)¶

Sample a successor state for this state for this action-model

Grid-based¶

Grid-based model based on a grid that spans the state space.

- class resources.model.grid_model.GridModel(stateSpace, nodesPerDim, activationRadius, b=None, **kwargs)¶

Grid-based model based on a grid that spans the state space.

The state transition probabilities and reward probabilities are estimated only for the nodes of this grid. Experience samples are used to update the estimates of nearby grid nodes. Using a discrete grid has the advantage that there is only a finite amount of states for which probabilities needs to be estimated.

- CONFIG DICT

nodesPerDim: : The number of nodes of the grid in each dimensions. The total number of grid nodes is thus nodesPerDim**dims. activationRadius: : The radius of the region around the query state in which grid nodes are activated b: : Parameter controlling how fast activation decreases with distance from query state. If None, set to “activationRadius / sqrt(-log (0.01))”

K-Nearest Neighbors¶

An action model class based on KNN state transition modeling.

This model is based on the model proposed in: Nicholas K. Jong and Peter Stone, “Model-based function approximation in reinforcement learning”, in Proceedings of the 6th international joint conference on Autonomous agents and multiagent systems Honolulu, Hawaii: ACM, 2007, 1-8, http://portal.acm.org/citation.cfm?id=1329125.1329242.

- class resources.model.knn_model.KNNModel(stateSpace, k=10, b_Sa=0.10000000000000001, **kwargs)¶

An action model class based on KNN state transition modeling.

This model learns the state successor (and predecessor) function using the “k-Nearest Neighbors” (KNN) regression learner. This learner learns a stochastic model, mapping each state $s$ to the successor $s’ = s + (s^’_{neighbor} - s_{neighbor})$ with probability exp(-(||s - s_{neighbor}||/b_Sa)^2)/ sum_{neighbor in knn(s)} exp(-(||s - s_{neighbor}||/b_Sa)^2). The reward function is learned using an KNN model, too.

- CONFIG DICT

exampleSetSize: : The maximum number of example transitions that is remembered in the example set. If the example set is full, old examples must be deleted or now new examples are accepted. k: : The number of neighbors considered in k-Nearest Neighbors b_Sa: : The width of the gaussian weighting function. Smaller values of b_Sa correspond to increased weight of more similar states

Locally Weighted Projection Regression (LWPR)¶

An action model class based on LWPR state transition modeling.

- class resources.model.lwpr_model.LWPRModel(stateSpace, exampleSetSize=1000, examplesPerModelUpdate=10000, init_d=25, **kwargs)¶

An action model class based on LWPR state transition modeling.

This model learns the state successor (and predecessor) function using the “Locally Weighted Projection Regression” (LWPR) regression learner. This learner learns a deterministic model, i.e. each state is mapped onto the successor state the learner considers to be most likely (and thus not onto a probability distribution).

The reward function is learned using a nearest neighbor (NN) model. The reason that NN is used and not LWPR is that the reward function is usually non-smooth and every more sophisticated learning scheme might introduce additional unjustified bias.

This model stores a fixed number of example transitions in a so called example set and relearns the model whenever necessary (i.e. when predictions are requested and new examples have been addedsince the last learning). The model is relearned from scratch.

- CONFIG DICT

exampleSetSize: : The maximum number of example transitions that is remembered in the example set. If the example set is full, old examples must be deleted or now new examples are accepted. examplesPerModelUpdate: : The number of examples presented to LWPR before the learning is stopped init_d: : The init_d parameter for LWPR that controls the smoothness of the learned function. Smaller values correspond to more smooth functions.

R-Max Model Wrapper¶

A wrapper for models that changes them to have RMax like behavior

A wrapper that wraps a given model and changes its behavior to be RMax-like, i.e. return RMax instead of the reward predicted by model if the exploration value is below minExplorationValue. The implementation is based on the adapter pattern.

- class resources.model.rmax_model_wrapper.RMaxModelWrapper(model, RMax, minExplorationValue, **kwargs)¶

A wrapper for models that changes them to have RMax like behavior

A wrapper that wraps a given model and changes its behavior to be RMax-like, i.e. return RMax instead of the reward predicted by model if the exploration value is below minExplorationValue.

New in version 0.9.9.

- CONFIG DICT

minExplorationValue: : The agent explores in a state until the given exploration value (approx. number of exploratory actions in proximity of state action pair) is reached for all actions RMax: : An upper bound on the achievable return an agent can obtain in a single episode model: The actual model (this is only a wrapper arount the true model that implements optimism in the face of uncertainty)

Tabular Model¶

An action model class that is suited for discrete environments

This module contains a model that learns a distribution model for discrete environments.

- class resources.model.tabular_model.TabularModel(stateSpace, **kwargs)¶

An action model class that is suited for discrete environments

This module contains a model that learns a distribution model for discrete environments.

New in version 0.9.9.

- CONFIG DICT

exampleSetSize: : The maximum number of example transitions that is remembered in the example set. If the example set is full, old examples must be deleted or now new examples are accepted.